March 6, 2017

“In many ways I see the sound world as still very young. We are still learning how to take advantage of all that we can do.”

Nicholas Pope, Sound Designer

Featured Products

CQ-1, D-Mitri, LEOPARD, UPJ-1P

One of the most celebrated musicals of the Broadway season and top contender for multiple Tony Awards, Natasha, Pierre & The Great Comet of 1812 (hereafter abbreviated “The Great Comet”) has earned critical acclaim not only for electrifying performances – notably by Josh Groban as Pierre – but also for the way the performers move freely throughout a set that encompasses nearly the entire audience.

From the outset, The Great Comet was written and designed with audience and performers commingling in a common space. The house is the stage, and vice-versa. Sound design was manageable in the production’s first small venues, but the challenge of keeping amplified voices and instruments – as some orchestra members roam about as well – properly localized in space became increasingly vexing as The Great Comet advanced into larger theatres. When the show prepared to open in Broadway’s expansive Imperial Theatre, sound designer Nicholas Pope was tasked with devising an intricate system that offered a degree of dimensionality and fluidity never before realized on the Great White Way.

A product of Yale University’s esteemed program in theatrical design, Pope has worked as a sound designer for university, regional theatre and cruise ship productions. He served as an associate on two prior productions of The Great Comet, and later became principal designer for the Broadway production.

Nicholas Pope


For those unfamiliar with the show, can you give us some background and outline your involvement?

Pope: Yes, the show started off Broadway in 2012 at Ars Nova, in a venue with fewer than 100 seats. Although the musical style required some amplification, the room was small enough that the direct acoustic energy coming from the performers was sufficient to localize the sound sources reasonably well as they moved around the space. That was largely still the case with the tent production in 2013, with about 200 seats, where I came on as an associate. By the time we’d moved to ART (American Repertory Theater) in Cambridge, with around 500 seats, localization had become much more of an issue.

And why was the move to Broadway such a challenge? Why did it demand such a sprawling and sophisticated system, and even creation of custom control software?

Pope: The initial intent comes from the artistic end. To me, the actors drive any theatrical piece, and we wanted to ensure that the actors and the audience were always in the same acoustical space so that the audience becomes part of the world of the actors. I needed the actors to be a real entity to everybody. A disembodied voice doesn’t work. It was important to have that connection with the voice at all times; it’s absolutely critical.

And that’s not easily done with this production in a Broadway theatre?

Pope: It’s a new level of complexity. When you step up to a Broadway-scale theatre, the sheer size of the space means you can no longer rely on acoustic energy alone for localization. Yes, you can rely on acoustic energy for anybody sitting near a performer, but a few rows back you no longer get that sense of intimacy and connection.

I wonder if you could elaborate on why this is even more difficult than it might seem at first glance.

Pope: Some of the difficulties are obvious. If you want to localize the sound of actors and instrumentalists throughout the space, not just when they are standing still but while they are moving, you need loudspeakers all around: a total of 248 in this case. It also means that live mics are in front of the main loudspeakers at all times, which can be a nightmare in itself.

And then there are the timing issues. The room is large enough that when two performers are completely across the room from each other, which happens often, you are out of musical time: the distance is great enough that you are no longer on the beat. Bringing all of those things back together, and keeping individual vocalists and instruments in time with each other when they are spread around distant parts of the auditorium, was one of the tricks of the show.

And all of that perspective changes with location. At one place in the room, sound arrives with zero delay from an instrument right beside you, while at another location the same information arrives perhaps 70 milliseconds later. How do you manage that on the electronic side of things and keep it all organized? That was another trick of the show.
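The 70-millisecond figure is easy to relate to physical distance. As a rough sketch, assuming a speed of sound of about 343 m/s (dry air near 20 °C) and a hypothetical tempo of 120 BPM chosen only for illustration, the arithmetic looks like this:

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at roughly 20 °C


def acoustic_delay_ms(distance_m: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Time for sound to travel distance_m metres, in milliseconds."""
    return distance_m / c * 1000.0


def distance_for_delay_m(delay_ms: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance sound covers in delay_ms milliseconds, in metres."""
    return delay_ms / 1000.0 * c


def fraction_of_beat(delay_ms: float, bpm: float) -> float:
    """Delay expressed as a fraction of one beat at the given tempo."""
    beat_ms = 60_000.0 / bpm
    return delay_ms / beat_ms


print(round(distance_for_delay_m(70.0), 1))       # 24.0
print(round(fraction_of_beat(70.0, bpm=120), 2))  # 0.14 (hypothetical tempo)
```

Under these assumptions, 70 ms corresponds to roughly 24 m of travel, or about 14% of a beat at 120 BPM. The helper names are illustrative only and are not part of D-Mitri or any Meyer Sound software.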

It is mind-boggling when you start to think it through. Why did you pick the Meyer Sound D-Mitri digital audio platform as your primary tool for managing all of this?

Pope: I had worked with D-Mitri and its predecessor, the LCS Matrix 3 system, on a number of occasions, and it was pretty much at the top of my list the entire time, mainly because it had the largest matrix available, at 288 by 288.

So it’s D-Mitri that’s responsible for making sure all the apparent sound sources are right where they need to be, moment by moment?

Pope: Yes, everything goes out through the D-Mitri processing engine. We didn’t have to use all of the 288 ins and outs, but I think we’re pushing 250 on both sides. It’s the backbone of the whole show. It’s a robust system that really sets the standard in its category. Meyer Sound is very much on the leading edge of the trend in this department.
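At its simplest, a matrix like the 288 × 288 one Pope describes assigns a gain to every input-to-output crosspoint (288 × 288 = 82,944 of them), and each output is the gain-weighted sum of all inputs. The following is a minimal, per-sample sketch of that idea under that assumption; the function name is hypothetical, and D-Mitri’s actual processing is not modelled here.

```python
from typing import List, Sequence


def matrix_mix(inputs: Sequence[float], gains: Sequence[Sequence[float]]) -> List[float]:
    """Mix one sample per input into every output.

    gains[i][j] is the linear gain from input i to output j (0.0 = not routed).
    """
    if len(gains) != len(inputs):
        raise ValueError("need one gain row per input")
    n_out = len(gains[0]) if gains else 0
    outputs = [0.0] * n_out
    for x, row in zip(inputs, gains):
        if len(row) != n_out:
            raise ValueError("every gain row must have the same number of outputs")
        for j, g in enumerate(row):
            outputs[j] += x * g  # accumulate this input's contribution to output j
    return outputs


# A 288 x 288 matrix with a single crosspoint set: input 0 feeds output 5 at half gain.
N = 288
gains = [[0.0] * N for _ in range(N)]
gains[0][5] = 0.5
inputs = [1.0] + [0.0] * (N - 1)
outputs = matrix_mix(inputs, gains)
print(outputs[5])  # 0.5
```

In this picture, panning a performer amounts to continuously rewriting the gains in that performer’s input row.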

And, on the software side, you are using Meyer Sound’s Spacemap for panning – with some custom additions, correct?

Pope: Yes, on one level we are taking heavy, heavy advantage of the mapping capabilities of Spacemap throughout the show. All localization of sounds runs through the basic cueing architecture of Spacemap. But we did have to devise a new front-end graphical interface to run it for this show.

And why is that?

Pope: It’s not because there’s anything inherently wrong with Spacemap’s own interface. I have used it many times, and it works well for basic programmed multi-dimensional panning. It’s perfect for applications like theme parks, spectaculars like Cirque du Soleil, and even most theatre applications. It’s fine if you only have a dozen or two panning trajectories in the show. But when you have hundreds going on, many of them simultaneously, and you need to make on-the-fly modifications in real time, you need something different.

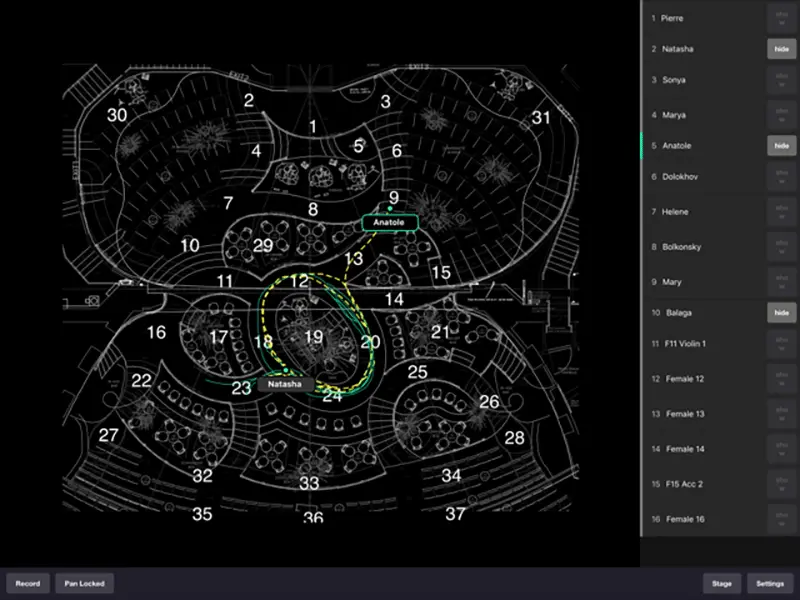

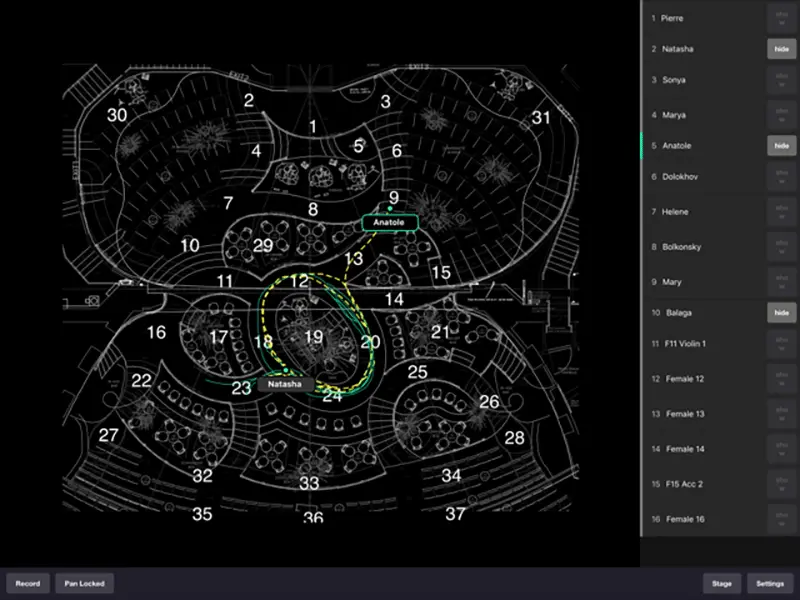

The custom iPad interface for Spacemap shows programmed trajectories of performers moving about the set. The “follow sound” operator can make adjustments to the panning matrix in real time on the touchscreen.


We understand that not only is your D-Mitri system the largest ever used on Broadway, but that yours is the most complex Spacemap program ever attempted. How do you make it work in the end?

Pope: Well, we hired a couple of computer programmers, Jake Zerrer and Gabe Rives-Corbett, and then working with wonderful cooperation from Meyer Sound, we built a front-end graphical interface that can be controlled in real time with an iPad. The screen shows a layout of the theatre and allows the operator to follow the programmed trajectories of the performers through the space and make any needed adjustments in real time by touch.

We’ll return to that in a moment, but it might help to understand first where the loudspeakers are placed and how you decided which loudspeakers to use.

Pope: I spent a lot of time working with Vectorworks files in 3D to figure out where I needed loudspeakers to ensure I could follow the actors and musicians anywhere in the space. And then I used acoustical prediction programs like Meyer Sound’s MAPP Online Pro to figure out what loudspeakers would give me the coverage I needed and still fit in the space allowed. It was an intense and laborious process!

And why Meyer Sound loudspeakers for the main system?

Pope: One of the advantages I find with Meyer Sound speakers, almost universally across the board, is that they play very nicely together. Doing clusters of various speakers works very well with Meyer Sound loudspeakers, which became very important in this show.

The speakers doing the heavy lifting in the show are the LEOPARD arrays and the CQ-1 loudspeakers, an older cabinet but one that still works very well. We also have a lot of UPJ-1P VariO loudspeakers that support the main clusters, and again, having speakers that work well when set next to each other proved to be extremely important.

And why the LEOPARD line arrays as the main anchor of the system? Had you worked with them before?

Pope: I had never worked with the LEOPARD line arrays nor had I heard them before I started my design. But I had heard very good things about them. It really came down to making sure I had the coverage pattern that I needed. There are only a couple of companies that I would trust for my mains and Meyer is certainly one of them. So we ended up going that route and I’m happy we did. They sound wonderful.

Was it a challenge getting all the loudspeakers hung where you wanted them?

Pope: Once I had figured out what the PA might look like, it was time to have discussions with my fellow designers. There is a lot going on above the audience in the show. I don’t know how many chandeliers there are (perhaps upwards of forty) that take up airspace, and obviously there are hundreds of lighting fixtures. Bradley, the lighting designer, has similar concerns because he must have open airspace in order to get light where it needs to be. So the negotiating process between the two of us and Mimi, the set designer, was pretty intense, though it was friendly. We were all working together for a common cause. But there were times when we really had to look hard at moving things just a few inches one way or another. That took a long time!

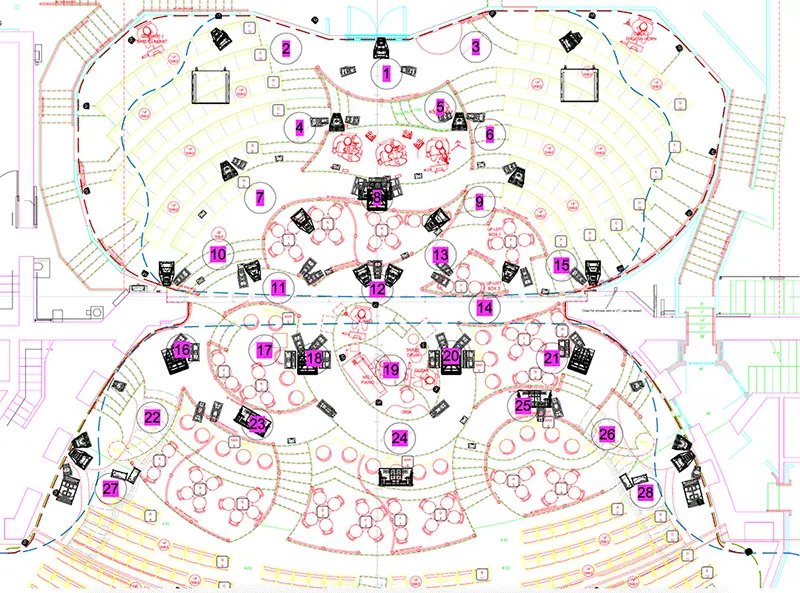

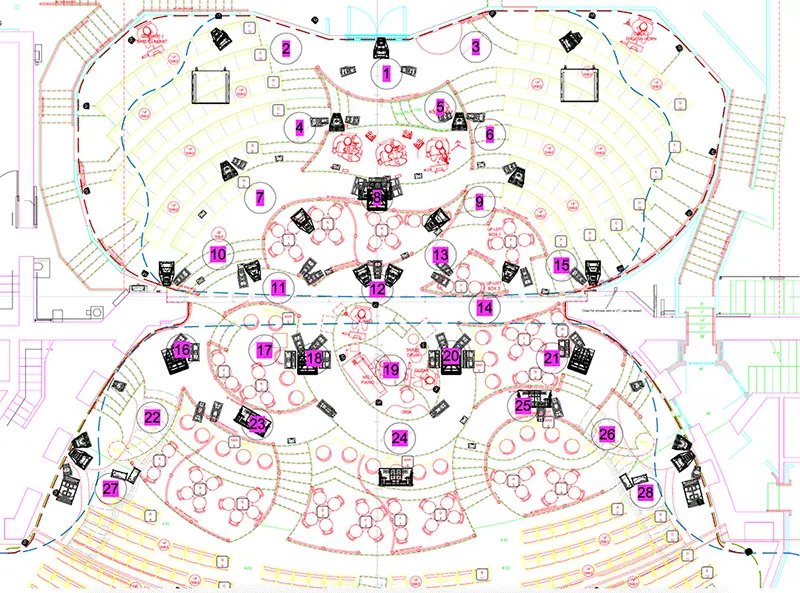

The numbered “narrative locations” are used as reference points for localization panning, shown here in relationship to the Meyer Sound loudspeaker arrays.


You identified about three dozen places in the theatre, called “narrative locations,” to use as a framework for locating loudspeakers and programming movements in the matrix. How does that work?

Pope: “Narrative locations” was a way we started talking about where people would be in the performance, and it became the backbone we used to build the programming of the show. We started building the speaker plots using these positions, where I knew I needed to localize the sound of the performers. But the way it is done in Spacemap is truly fluid, because you can pan in three dimensions among all the loudspeaker source locations. So although those serve as the building-block locations, the show doesn’t function by moving directly from one location to another, because they actually live in a processing environment that encompasses the entire space. Movements can be fluidly panned in any direction.
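Spacemap’s internal panning math isn’t described here, so the following is only a generic illustration of how a source can glide smoothly between loudspeaker positions rather than jumping from one location to the next. It assumes a flat 2D layout and uses barycentric (triangle-based) amplitude panning with constant-power gains across three loudspeakers; the function names and coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def barycentric(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, float, float]:
    """Barycentric weights of point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("loudspeakers are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def triangle_pan_gains(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, ...]:
    """Constant-power gains for three loudspeakers at a, b, c.

    Negative weights (source outside the triangle) are clamped to zero, the rest
    are renormalised to sum to 1, and the square root makes the squared gains
    sum to 1 so total power stays constant as the source moves.
    """
    weights = [max(0.0, w) for w in barycentric(p, a, b, c)]
    total = sum(weights)  # > 0, because the raw weights sum to 1
    return tuple(math.sqrt(w / total) for w in weights)


# Hypothetical loudspeaker positions in metres.
A, B, C = (0.0, 0.0), (10.0, 0.0), (0.0, 10.0)

# A performer walking from A toward the midpoint of edge B-C: gains change smoothly.
for step in range(5):
    t = step / 4
    pos = (5.0 * t, 5.0 * t)
    print(pos, [round(g, 3) for g in triangle_pan_gains(pos, A, B, C)])
```

At A the source sits entirely in loudspeaker A; at the midpoint of B and C it is shared equally between them, with every position in between blended continuously.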

So performers don’t have to be on those exact spots to be properly localized?

Pope: Not at all. True, there are moments in the show where we don’t need to move people and they happen to be standing right on a number, so we simply put them on that number in the Spacemap program. There is some of that in the show, because that’s how it was blocked by the show’s director, Rachel Chavkin. But people also move a fair amount while they are talking, singing or playing instruments, and at any time the way the sound follows them is totally fluid.

This is considerably more complicated than localizing performers left to right across a proscenium, correct?

Pope: Yes, localization has been done on Broadway for some time, but usually it simply moves sounds in a plane from left to right, in three or four zones of depth. If you are sitting in a traditional theatre with a proscenium stage, the angle the performers span from your seat could be as small as 20 degrees side to side, depending on where you sit, and it’s rarely more than 90 degrees, all of it up front. There is not much differentiation between one extreme and the other for a large percentage of the audience.

But for a significant portion of the audience in this show, the experience is 360 degrees. The performers could literally walk around you, and they do so many times throughout the show. It’s one thing for the sound localization to be off by 15 or 20 degrees, but it’s another thing for the sound to be off by a full 180 degrees. You’re looking at somebody in front of you and their voice seems to be coming from behind you. That would be a problem, and it would likely pull you out of the story.
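The difference between a small localization error and a 180-degree one can be made concrete by comparing the direction in which a listener sees a performer with the direction from which the amplified voice arrives. The sketch below is a hypothetical 2D illustration (positions in metres, invented for the example), not a model of the Imperial Theatre:

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def bearing_deg(listener: Point, target: Point) -> float:
    """Direction from listener to target in degrees (0 = +x axis, counterclockwise)."""
    return math.degrees(math.atan2(target[1] - listener[1], target[0] - listener[0]))


def localization_error_deg(listener: Point, performer: Point, loudspeaker: Point) -> float:
    """Angle between where a performer is seen and where the loudspeaker is heard."""
    diff = bearing_deg(listener, loudspeaker) - bearing_deg(listener, performer)
    diff = (diff + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return abs(diff)


seat = (0.0, 0.0)
# Proscenium-style case: performer and loudspeaker both ahead, 3 m apart, 20 m away.
print(round(localization_error_deg(seat, (0.0, 20.0), (3.0, 20.0)), 1))  # 8.5
# Immersive case: performer just in front, the only active loudspeaker behind.
print(localization_error_deg(seat, (0.0, 5.0), (0.0, -5.0)))  # 180.0
```

When everything is in front of the listener, a misplaced loudspeaker shifts the image by a few degrees; in a 360-degree room the same mistake can put the voice directly behind the listener.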

And to make this work smoothly, you’ve added another member to the sound team, the “follow sound” operator.

Pope: Yes, Scott Sanders is our localization operator, and he is responsible for 30 people, which means the equation is not quite as balanced as the one-to-one of a follow-spot operator. So there is an intense amount of up-front programming in the show. Then Scott is responsible for babysitting the most important aspects of movement, and he definitely does live grabs to adjust performers throughout the show. That is where the iPad really comes in handy, as it allows him to do that intuitively and quickly.

Your main mixing console is a DiGiCo SD7, but all of the outputs are on the D-Mitri engine. How did you make that work?

Pope: Basically, we took the input side of the DiGiCo and spliced it into the D-Mitri system for matrixing and panning. It took a lot of AES3 cables, but it’s a solid connection. It will be a whole lot easier to do this sort of thing when all the manufacturers can get together on a common networking standard. I’m certainly looking forward to that day.

This show has put you on the leading edge of sound design. Is this a new trend for Broadway? Or will others hold back, thinking it’s really not worth the trouble?

Pope: We really did push the envelope with this one. Sam Ellis, our tech supervisor, is a seasoned veteran on Broadway, and there was more than one time when he would say, “Well, I’ve never done that one before” or “That’s the most of such-and-such I’ve ever had to do!”

Ultimately, though, it’s not a sound design question but rather a question of the way directors and designers approach a show. The proscenium theatre has been around a long time and is working very well even with the newer shows. But I like this concept where the audience becomes more of an integral part of the story. We will see.

As far as the audio end of things, in many ways I see the sound world as still very young. We are still learning how to take advantage of all that we can do. I think people are still figuring out the possibilities as we get more and more tools at our disposal, which in turn opens new realms of creative thinking.

At one level the professional sound world is incredibly technical, yet I think it should be driven artistically. Building interfaces between those two worlds is one of the fruitful areas left, and to me that was a big part of this project. Conceptually, thinking of how to move the sound around through multiple spaces is simple. Even a kid would say, “Hey, if somebody is singing over there, then their voice should sound like it is coming from over there.” But when you try working that out from an engineering standpoint, it becomes mind-boggling. Making those two worlds meet is where a lot of challenges still remain.

Nicholas Pope would like to thank the entire audio crew for their contributions to the success of the show.

Audio crew:

FOH engineers: Walter Tillman, Scott Sanders

Backstage/RF: Jim Bay, John Cooper

Production engineer: Mike Wojchik

Associate sound designers: Sam Lerner, Charles Coes

Sound assistants: Beth Lake, Steve Dee

Audio equipment supplier:

Masque Sound
